Introduction: AI Meets BI Dashboards

Business intelligence (BI) dashboards are evolving from static reports into interactive, AI-driven decision tools. Large language models (LLMs) like DeepSeek are at the forefront of this transformation, bringing conversational intelligence and automated insights to dashboards.

By integrating AI in dashboards – essentially embedding an LLM within BI platforms – organizations can query data in natural language, generate insights on the fly, and even automate analysis and reporting.

The result is a dashboard that not only visualizes data but also interprets it, enabling faster, smarter decisions. In this article, we’ll explore how DeepSeek augments BI dashboards with conversational querying, insight generation, and automation, and provide practical guidance on integration, comparisons with other LLMs, example use cases, and considerations for privacy and deployment.

Why LLMs Like DeepSeek Are Valuable for BI

Traditional BI tools excel at presenting charts and tables, but they often rely on users to manually explore data and derive conclusions. DeepSeek, an advanced open-source LLM platform, can dramatically enhance this experience by automatically analyzing data, understanding context in plain English, and generating insights or answers on the fly. Here’s why incorporating an LLM like DeepSeek into BI dashboards is so powerful:

Conversational Querying: DeepSeek enables natural language question-answering on your data. Users can simply ask questions in everyday language and get instant answers, without writing SQL or clicking through filters. For example, instead of manually filtering a report, a manager could ask “Which region had the highest sales growth this quarter?” and DeepSeek will interpret the data to respond with the region and key figures. This conversational BI approach makes data accessible to non-technical users and speeds up analysis. (Notably, Twilio built an AI assistant that converts analysts’ plain English questions into SQL on their Looker data, automating what used to be a tedious querying process.)

Automated Insight Generation: DeepSeek’s models excel at finding patterns and anomalies that manual analysis might miss. They can detect outliers, perform sentiment analysis on text feedback, or summarize trends automatically. Your dashboard can highlight why a metric spiked, not just show that it did. It’s like having a virtual analyst constantly scanning the data for notable changes. For instance, if weekly revenue suddenly drops, DeepSeek could immediately flag the contributing factor – say, a decline in a specific product line or region – and provide a brief explanation on the dashboard.

Predictive Analytics and Recommendations: Beyond explaining historical data, DeepSeek can perform forward-looking analysis. Thanks to strong reasoning and math capabilities, it can recognize patterns and forecast future metrics or detect likely anomalies before they happen. A DeepSeek integration might project next quarter’s sales based on trends or warn that a certain KPI is likely to fall outside normal ranges. These AI-driven forecasts and recommendations (e.g. suggesting inventory adjustments or marketing actions) help companies move from reactive reporting to proactive planning.

Automated Narratives & Data Storytelling: One of the most powerful features is letting DeepSeek generate narrative summaries of your data – essentially automating the commentary and reporting process. After analyzing a dataset or an entire dashboard, DeepSeek can produce a written summary of key findings, and even suggest visuals to include. For example, it might add an “executive summary” text box to a dashboard that highlights the quarter’s performance drivers (e.g. “Enterprise revenue declined 8%, mainly due to lower Q2 retention in APAC”). These narratives update as data updates, giving stakeholders a quick, plain-English briefing alongside charts. Analysts save hours on writing reports or slide decks because the automated business insights are generated instantly.

Accelerated and Consistent Decision-Making: By combining the above capabilities, DeepSeek transforms a dashboard from a passive tool into an active advisor. Leaders get concise explanations and recommendations in seconds, instead of waiting days for an analyst’s report. Every user sees the same data-driven narrative, ensuring consistency in insights. This AI augmentation bridges the gap between raw data and decisions, helping teams focus on action rather than interpretation. In short, an LLM-augmented dashboard becomes an interactive, conversational interface to your data, rather than just a static display.

Integrating DeepSeek into BI Platforms

DeepSeek can be integrated into popular BI platforms – Power BI, Tableau, Looker, or even custom dashboards – through APIs and extension frameworks.

In practice, integration involves calling DeepSeek’s API (or using its open-source models directly) to fetch AI-generated answers or insights, and then displaying those within your dashboard. Below we outline how DeepSeek integration works for major BI tools, with practical examples:

DeepSeek Integration with Power BI

Microsoft Power BI’s flexibility (especially its Power Query engine) makes it straightforward to incorporate DeepSeek. The typical approach is to use Power BI’s ability to call web APIs so that you can send a query to DeepSeek and retrieve the result as part of your data model. Here’s a high-level integration workflow:

- API Access: First, obtain a DeepSeek API key by signing up on the DeepSeek AI platform. This key will allow your Power BI queries to authenticate with DeepSeek’s service.

- Power Query Call: In Power BI Desktop, use Power Query to call the DeepSeek API. You can create a Blank Query in the Power Query Editor (e.g., named DeepSeek_API_Call) and write an M script that sends an HTTP request to DeepSeek for each record or question in your dataset. Power Query’s Web.Contents function is used to POST a prompt (such as a row’s “Question” field or any text you want analyzed) to the DeepSeek endpoint, including your API key in the header. The API’s JSON response, which contains the model’s answer, is then parsed and added as a new column (say “AI_Answer”) in your data table.

- Refresh and Use Insights: After adding this custom column via the query, you apply changes so that Power BI pulls the data. For each row (or each prompt), an API call is made and the AI-generated answer populates the new column. For example, if one prompt was “What is the capital of France?”, the new column would return “The capital of France is Paris.” as the answer. You can then use these AI answers in your report – for instance, displaying them in a table next to the questions, or using them in cards/tooltip explanations for visuals.

- Interactive Q&A: This integration effectively allows natural language Q&A within Power BI. You might set up a scenario where users type a question into a Power BI parameter or a table (as a sort of search box), and the model’s answer appears on the report. For instance, you could have a table with one row where a user enters a question like “Which product category saw the biggest drop in profit last month?” – after refresh, DeepSeek’s answer (e.g. “Electronics saw the biggest drop, with profits down 15% due to higher return rates”) would be shown. While the above approach runs on data refresh (or on schedule), you can also explore using Power BI’s real-time capabilities or Power Automate to trigger DeepSeek calls so that the Q&A feels instantaneous. (Be mindful of the number of API calls if your dataset has many rows – you might want to restrict queries or cache results to control costs and performance.)

Overall, integrating DeepSeek with Power BI is very customizable – you can create AI-generated measures, columns, or tooltips that enhance your existing visuals. It essentially embeds a language model into your BI workflow, enabling dynamic insights directly in Power BI reports.
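The Power Query steps above come down to one HTTP POST per prompt plus a JSON parse. The sketch below shows that shape in Python rather than M, so it is an illustration of the logic and not a drop-in Power Query script. It assumes DeepSeek's API accepts OpenAI-style chat-completion requests; the endpoint URL, the deepseek-chat model name, and the DEEPSEEK_API_KEY environment variable are assumptions to verify against the current API documentation. Results are cached so that repeated questions cost a single call.

```python
import json
import os
import urllib.request
from functools import lru_cache

# Assumed endpoint and model name; verify against the DeepSeek API docs.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-chat"


def build_payload(question: str) -> dict:
    """Build an OpenAI-style chat request body for one prompt."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": "You are a BI assistant. Answer briefly."},
            {"role": "user", "content": question},
        ],
    }


def parse_answer(response_body: dict) -> str:
    """Pull the answer text out of an OpenAI-style response body."""
    return response_body["choices"][0]["message"]["content"].strip()


@lru_cache(maxsize=1024)
def ask(question: str) -> str:
    """POST one prompt; cached so identical questions trigger one API call."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_payload(question)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['DEEPSEEK_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return parse_answer(json.load(response))


def add_answer_column(rows: list[dict], ask_fn=ask) -> list[dict]:
    """Return copies of the rows with an AI_Answer column added."""
    return [{**row, "AI_Answer": ask_fn(row["Question"])} for row in rows]
```

Passing a stub as ask_fn (for example, a lambda that returns a fixed string) lets you exercise the column logic without spending API calls.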

Tableau Integration

Tableau can also be augmented with DeepSeek, though it requires a different approach since Tableau doesn’t natively support calling external APIs in calculations. A common method is to leverage Tableau’s extension and scripting APIs. Two practical options are:

- Using TabPy (Python integration): Tableau has an external services API (TabPy) that lets you call Python code from calculated fields. By deploying a small Python service that calls the DeepSeek API, you can route user questions from Tableau to DeepSeek and display the answers. For example, you would set up TabPy locally or on a server, then in Tableau create a parameter for user input (e.g. a string parameter “User Question”) and a calculated field using SCRIPT_STR that passes the parameter to your Python function. The Python function (running via TabPy) takes the question and perhaps some context data, calls deepseek.ask() (or the REST API) and returns a text answer. When a user types a question (say, “Why did our West region sales spike in July?”) and hits enter, Tableau sends this to the Python service, DeepSeek processes it, and the answer is returned and shown in Tableau (for instance, in a text box visualization). This essentially gives you an “Ask why” feature in Tableau. One practitioner described the experience as “Imagine asking Tableau ‘Why did whiskey sales spike in July?’ and getting contextual answers – powered by an LLM.” With DeepSeek behind the scenes, the dashboard can explain chart trends in plain language.

- Using Dashboard Extensions: Tableau supports JavaScript-based dashboard extensions. You could build a custom extension that calls the DeepSeek API directly from the dashboard (using JavaScript when a user clicks a button or asks a question) and then displays the response on the dashboard. This approach might not require running a separate TabPy server, but does need web development skills and careful handling of API keys. Some no-code platforms (like Make.com or Appy Pie) even allow connecting Tableau events to external APIs like DeepSeek. For example, an extension could trigger whenever a user selects a data point or whenever new data is loaded, and then fetch a DeepSeek-generated insight for that context.
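To make the TabPy route concrete, here is a minimal sketch of the Python side. TabPy hands each script argument to Python as a list, so the handler accepts a list of questions and returns a list of answers. The names make_tabpy_handler, explain, and fake_ask are hypothetical, and fake_ask stands in for a real DeepSeek call.

```python
from typing import Callable, List


def make_tabpy_handler(ask_fn: Callable[[str], str]) -> Callable[[List[str]], List[str]]:
    """Wrap an LLM call so it accepts and returns lists, as TabPy scripts do."""

    def explain(questions: List[str]) -> List[str]:
        answers = {}  # a question repeated across rows is only asked once
        results = []
        for question in questions:
            text = (question or "").strip()
            if not text:
                results.append("")  # empty or missing input gets an empty answer
                continue
            if text not in answers:
                answers[text] = ask_fn(text)
            results.append(answers[text])
        return results

    return explain


# Stand-in for a real DeepSeek call (hypothetical; swap in your API client).
def fake_ask(question: str) -> str:
    return f"[model answer to: {question}]"


explain = make_tabpy_handler(fake_ask)
print(explain(["Why did West sales spike in July?", "", None]))
```

Once a function like this is deployed to TabPy under a name such as explain, a calculated field could call it with something along the lines of SCRIPT_STR("return tabpy.query('explain', _arg1)['response']", [User Question]). Treat that expression as a starting point and check the TabPy documentation for the exact syntax in your version.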

Use Cases in Tableau: Once integrated, conversational analytics becomes possible. You can implement features like a “Why?” button next to a chart – when clicked, it sends the chart’s data (or filters) as context to DeepSeek and returns an explanation of the pattern.

DeepSeek can also perform computations or advanced analysis on the fly; for instance, the user could ask for a sentiment summary of customer comments for the selected product, and the LLM’s answer (positive/negative trend) could be displayed instantly (bypassing the need to precompute sentiment in your data source).

Essentially, Tableau’s visuals are enhanced by an AI commentary layer: the dashboard not only shows what is happening but, with DeepSeek, can assist in explaining why and what to do next. It elevates Tableau from a purely visual analysis tool to an interactive, conversational BI system.

(It’s worth noting that Tableau is itself introducing AI features – like Salesforce’s Einstein GPT integration and a native “ask data” LLM interface – which underscores the demand for such capabilities. Using DeepSeek, especially as an open model, lets you achieve similar results on your own terms.)

Looker (Google Looker & Looker Studio) Integration

Google’s Looker platform (and the lighter Looker Studio) can integrate with DeepSeek to enable natural language exploration and AI-driven insights in a few ways:

- Looker Extension (in-app assistant): Looker has an extension framework that allows custom web apps to live inside the Looker interface. Google has even open-sourced a Looker “GenAI Dashboard Extension” that uses their Vertex AI models to let users chat with their data. You can build a similar extension but swap in DeepSeek as the LLM backend. In practice, the extension would provide an “Ask AI” chat box on a Looker dashboard. When a user asks a question, the extension can gather context (like the dashboard’s current filters or a summary of the visible data) via Looker’s API, send that as a prompt to DeepSeek, and then display the answer right there in the dashboard. The answer could be text or even a generated chart suggestion. This method is powerful because it all happens within Looker’s UI seamlessly. For example, a sales manager viewing a dashboard could click Ask AI and query, “What were the main drivers of the revenue drop last month in Europe?” The extension could compile relevant metrics from the dashboard (e.g. revenue by country, product, month) and prompt DeepSeek, which might respond with something like, “European revenue fell 5%, mainly due to a 12% drop in Germany where our Q4 promotion ended. Declines were highest in the Electronics segment.” – all shown next to the charts.

- Natural Language to SQL (external assistant): Another approach, demonstrated by Twilio’s BI team, is to use an LLM to translate questions into queries using Looker’s data model (LookML). In Twilio’s case, a virtual assistant took a user’s question, consulted Looker’s schema to understand the data, and generated the appropriate SQL which could then be run on the database. With DeepSeek’s strong reasoning and code generation abilities, you could build a similar helper outside the Looker UI. For instance, a user asks in a chat app or Slack, “Show me total signups vs. cancellations by week last quarter.” Your application would find the relevant dimensions/measures via the Looker API or LookML, prompt DeepSeek with something like “Generate SQL for signups and cancels by week from looker_model.x”, and get back a SQL query or a result description. That SQL can then be executed against the data warehouse, and the results returned or even pushed into a Looker dashboard. This LLM-as-query-engine approach can make data exploration much faster for analysts, though it may require careful prompt engineering and validation (to ensure the SQL is correct). DeepSeek’s open nature allows fine-tuning if needed to better understand your company’s schema and terminology, improving accuracy of the generated queries.

- Scheduled Insight Generation: Even without interactive chat, DeepSeek can automate Looker reports by running behind the scenes. For example, Looker’s Action Hub could be set to send data or alerts to a DeepSeek API on a schedule. Imagine each morning Looker triggers an export of yesterday’s sales metrics to a small script that feeds it to DeepSeek. DeepSeek then generates a brief narrative analysis (e.g., “Yesterday’s sales were 5% above average, driven by a surge in Product X sales in the Northeast.”) and emails that to the team or writes it back to a dashboard tile. This way, every day starts with an AI-generated summary of performance. Similarly, you could have a “Generate Insights” button on a dashboard that, when clicked, compiles all the dashboard’s charts/data into a prompt for DeepSeek, which then returns a paragraph of key takeaways covering all metrics. This is essentially what Google’s upcoming Gemini AI in Looker aims to do – using an LLM to consider all dashboard tiles and produce context-aware insights. With DeepSeek, you can achieve a custom version of this future today, tailoring it to your needs and even the tone or format you prefer.
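The natural-language-to-SQL approach above depends on validating whatever the model returns before it touches the warehouse. The sketch below shows one way to build the prompt from a schema description and to apply a deliberately conservative read-only check. The schema dictionary, function names, and keyword list are illustrative assumptions; the check is regex-based, so it will reject some legitimate queries (for example, ones using CTEs or EXTRACT ... FROM), and a real SQL parser would be a better fit in production.

```python
import re

# Keywords that indicate a statement could modify data or permissions.
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|create|truncate|grant|revoke|merge)\b",
    re.IGNORECASE,
)


def build_sql_prompt(question: str, schema: dict[str, list[str]]) -> str:
    """Describe the available tables and ask for a single SELECT statement."""
    lines = [f"- {table}({', '.join(columns)})" for table, columns in schema.items()]
    return (
        "You write SQL for a data warehouse. Use only these tables:\n"
        + "\n".join(lines)
        + "\nReturn exactly one SELECT statement and nothing else.\n"
        + f"Question: {question}"
    )


def is_safe_select(sql: str, schema: dict[str, list[str]]) -> bool:
    """Conservative check: one read-only SELECT over known tables only."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:  # more than one statement
        return False
    if not re.match(r"(?i)^select\b", statement):
        return False
    if FORBIDDEN.search(statement):
        return False
    # Every name after FROM or JOIN must be a table listed in the schema.
    referenced = re.findall(r"(?i)\b(?:from|join)\s+([A-Za-z_][\w.]*)", statement)
    known = {table.lower() for table in schema}
    return bool(referenced) and all(name.lower() in known for name in referenced)
```

Only statements that pass is_safe_select would be forwarded to the database, ideally through a read-only account so that the check is a second line of defense and not the only one.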

Custom Dashboards and Other Platforms

If your organization uses a custom-built dashboard or a different BI tool, you can still integrate DeepSeek with a bit of development work. The general pattern is the same: use the tool’s scripting or API capabilities to send data or queries to the DeepSeek model and display the results.

For a web-based custom dashboard, you might call DeepSeek’s REST API via a back-end service or directly from front-end code to enable features like a chatbot overlay or automatic narrative annotations on charts.

Many integration platforms (such as Make.com or Pipedream) provide pre-built connectors for DeepSeek and BI tools, which can simplify setting up triggers and actions (for example, “when new data is uploaded, ask DeepSeek for key insights and post them to the dashboard”).

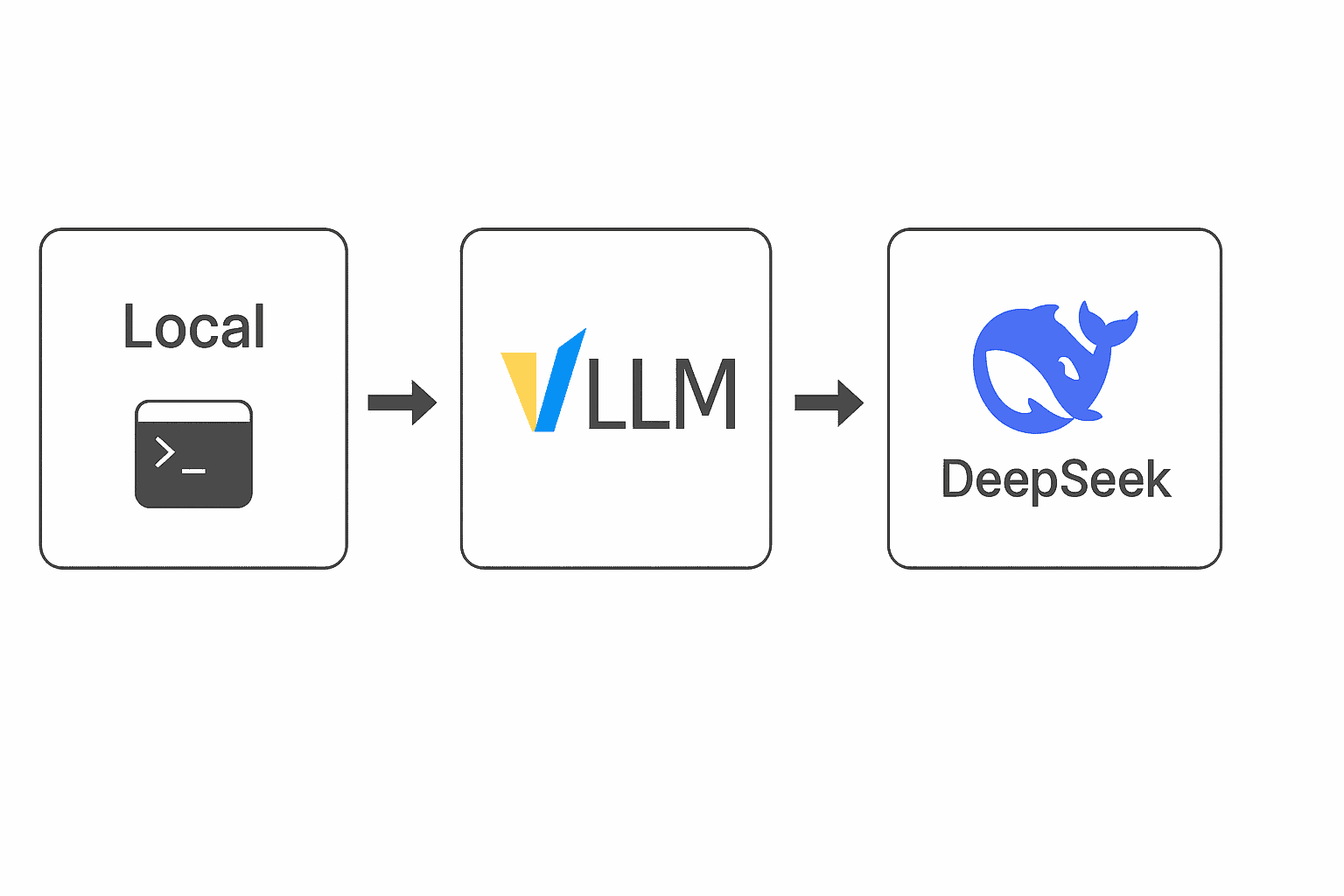

Because DeepSeek is open-source, another option is to run the model locally and expose it via an API to your internal applications – this way, your dashboard can call the model on-premises without data leaving your environment.

Key Integration Takeaway: No matter the platform, integrating an LLM follows a similar flow – user asks or AI monitors → data is sent to model → model returns narrative/answer → dashboard displays it. By implementing this, your BI tool gains a conversational interface and an automated analyst working alongside your charts.

The examples above show that whether it’s DeepSeek integration with Power BI using Power Query or hooking into Tableau/Looker with scripts, the result is a more intelligent dashboard that delivers insights proactively.
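The four-step flow in the takeaway (ask, send data, get an answer, display it) can be expressed as a small function whose steps are pluggable. This is a sketch under assumptions: Insight, run_insight_flow, and the three callables are hypothetical names, and the lambdas below are stand-ins so the example runs without a BI tool or a model server.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Insight:
    question: str
    answer: str


def run_insight_flow(
    question: str,
    fetch_context: Callable[[], str],
    call_model: Callable[[str], str],
    display: Callable[[Insight], None],
) -> Insight:
    """User asks -> data is sent to model -> model answers -> dashboard shows it."""
    context = fetch_context()  # e.g. current filters or tile data
    prompt = f"Dashboard data:\n{context}\n\nQuestion: {question}"
    insight = Insight(question=question, answer=call_model(prompt))
    display(insight)  # e.g. write the text into a dashboard tile
    return insight


# Stand-ins for the BI tool and the model.
shown = []
result = run_insight_flow(
    "Which region grew fastest?",
    fetch_context=lambda: "region,growth\nWest,0.12\nEast,0.04",
    call_model=lambda prompt: f"(model reply to {len(prompt)} chars of prompt)",
    display=shown.append,
)
print(result.answer)
```

Because the model is just a callable, switching between a cloud API and a self-hosted endpoint only changes what is passed as call_model; the rest of the flow stays the same.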

Example Prompts and AI-Driven Workflows

To illustrate how DeepSeek-powered dashboards can be used in practice, consider the following example scenarios and prompts:

- Diagnostic Q&A: “Explain the drop in revenue last quarter based on the uploaded sales dashboard.” – An analyst might present this query to DeepSeek after updating a sales dashboard with Q4 data. The model would analyze the sales metrics, compare segments and time periods, and produce an explanation of the revenue drop. For instance, it might highlight that enterprise renewals declined significantly in APAC in Q4, causing an 8% revenue dip. The explanation could read: “Revenue declined 8% last quarter, primarily due to lower retention in Q2 enterprise accounts. The drop was most pronounced in the APAC region, driven by a 22% decrease in renewals.” This kind of answer gives management a clear reason why revenue fell, drawn directly from the data.

- Automated Dashboard Summaries: “Generate 3 key takeaways from this week’s performance dashboard.” – A product manager could ask DeepSeek for the top insights from a weekly KPI dashboard. DeepSeek would effectively read all the charts and figures on the dashboard and return a succinct summary. For example, the model might output a brief list of bullet points, such as:

- Bookings: Total bookings fell by 11% week-over-week, mainly due to a delay in contract closures within the healthcare segment (mid-market tier).
- Customer Churn: Churn rate increased to 17% for Q2 cohorts, particularly among users onboarded during the January campaign, with lower satisfaction scores and longer support resolution times contributing to the drop-off.
- Inventory Alert: Inventory at Distribution Center 4 is running 23% below forecast; at this pace a stock-out is projected within 5 business days, indicating a need to replenish stock urgently.

- Interactive “Why” and “What-if”: Beyond explicit prompts, a DeepSeek-augmented dashboard can support interactive exploration. A user might click on a spike in a chart and trigger a behind-the-scenes prompt like “Explain the cause of the spike in metric X on date Y.” The model could then display an annotation on the chart explaining that spike (e.g., a seasonal sale or a one-off event). Similarly, one could ask hypothetical questions: “If our website traffic doubles next month, what is the expected impact on conversions?” – if the model has been fine-tuned or given data relationships, it could attempt to answer with a reasoned hypothesis or direct the user to relevant data (blending predictive modeling with its language understanding). These conversational workflows make the dashboard experience more like talking to a data-savvy colleague who can answer ad-hoc questions and do analysis in real time.
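A model cannot literally read charts unless their underlying numbers reach the prompt. One way to prepare a summary request like the one above is to compute the week-over-week changes yourself and pass them as text, so the model narrates figures instead of doing the arithmetic. The function names and the sample numbers below are made up for illustration.

```python
def pct_change(current: float, previous: float) -> float:
    """Week-over-week change as a percentage; previous must be non-zero."""
    return (current - previous) / previous * 100.0


def build_summary_prompt(kpis: dict[str, tuple[float, float]], takeaways: int = 3) -> str:
    """Build a summary prompt; kpis maps a metric name to (this_week, last_week)."""
    lines = []
    for name, (current, previous) in kpis.items():
        change = pct_change(current, previous)
        lines.append(f"- {name}: {current:g} (previous {previous:g}, {change:+.1f}%)")
    return (
        f"Generate {takeaways} key takeaways from this week's performance dashboard.\n"
        "Use only the figures listed below and do not invent causes.\n"
        + "\n".join(lines)
    )


# Made-up numbers for illustration only.
print(build_summary_prompt({"Bookings": (890, 1000), "Churn rate %": (17, 15)}))
```

Precomputing the deltas keeps the numbers in the narrative traceable to the dashboard, which makes the output easier to verify.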

DeepSeek vs. GPT-4, Claude, and LLaMA 2 in BI

There are several LLMs that could be applied to BI tasks – OpenAI’s GPT-4, Anthropic’s Claude, Meta’s LLaMA 2, among others. How does DeepSeek compare, especially for business intelligence use cases? Let’s look at a few key considerations:

- Token Context Window: The context window determines how much data the model can handle in a single query. BI data can be complex or high-dimensional (think entire tables, or lengthy reports). DeepSeek stands out with support for very long context lengths – reportedly up to 128K tokens in its latest versions. This means DeepSeek can ingest massive amounts of dashboard data or text (hundreds of pages) at once. By contrast, the original GPT-4 maxed out at 32K tokens (in its 32K variant) and Llama 2 models at 4K, while Claude 2 introduced a 100K token window. Later releases have narrowed the gap: GPT-4 Turbo supports 128K tokens and newer Claude models 200K, so check current limits before relying on a context-size advantage. In practice, a larger context allows the model to consider more data points simultaneously – for example, analyzing an entire quarterly report or a very large dataset in one go. DeepSeek’s 128K context is among the largest available in an openly released model, exceeding the original GPT-4 limit and Claude 2’s 100K. For BI teams, this means DeepSeek could potentially take in all the text from a complex dashboard (captions, metrics, descriptions) or raw data excerpts without needing to summarize or chunk it first. Complex queries that require understanding multiple charts or documents are more feasible with a big context window.

- Performance and Reasoning: DeepSeek is a frontier model that the community has benchmarked as on par with many OpenAI, Claude, and Meta models in reasoning and knowledge tasks. DeepSeek’s largest variant (R1 at 671B parameters with a Mixture-of-Experts architecture) was designed to rival top-tier proprietary models in quality. For instance, DeepSeek’s 67B model has been shown to slightly outperform LLaMA 2 70B on reasoning, coding, math, and even some language comprehension tasks. The practical implication is that DeepSeek can handle the analytical reasoning needed for BI use cases (explaining causality, doing math on the data, generating correct formulas) at a level comparable to the best closed-source LLMs. Its ability to do chain-of-thought reasoning (and even output the reasoning if needed) can increase transparency in how it arrives at answers – a useful feature when you need to trust its explanations. GPT-4 is widely regarded as extremely capable in reasoning and nuanced Q&A, and Claude is known for lengthy but coherent explanations – DeepSeek aims for that caliber while remaining open.

- Open-Source vs. Proprietary (Deployment Flexibility): One of DeepSeek’s biggest advantages is that it’s open-source and self-hostable. You can download DeepSeek models (available in sizes like 7B, 14B, 67B parameters, or distilled smaller versions) and run them on your own hardware. This is not an option with GPT-4 or Claude, which are cloud-only; you must use their APIs or platforms. LLaMA 2 is also open-source (under a permissive license for commercial use), so it similarly allows on-prem deployment. For a cost-sensitive or highly secure BI environment, being able to self-host an LLM is crucial. API pricing for GPT-4 and Claude can become expensive when analyzing large datasets or frequent queries – for example, OpenAI’s GPT-4 32K context model has a cost per 1K tokens (input and output) that can add up quickly for enterprise usage. Claude’s pricing (for its API with 100K context) is also usage-based. In contrast, running DeepSeek or LLaMA in-house has no per-query fees – you incur hardware and maintenance costs instead. According to DeepSeek’s developers, its design (using Mixture-of-Experts) is highly cost-efficient, claiming up to 95% lower cost per token in operation compared to some other models. This can make a big difference if your BI solution will be making thousands of queries or scanning huge data dumps daily.

- Customization and Fine-Tuning: Every company has its own metrics, jargon, and business logic. Adapting an LLM to understand these specifics can greatly improve its usefulness in BI. DeepSeek and LLaMA 2, being open, allow fine-tuning on your own data or domain. You could fine-tune DeepSeek on your data warehouse’s SQL logs, or on a corpus of internal reports, to teach it the precise language and context of your business. This kind of fine-tuning can align the model’s outputs with company-specific knowledge (for instance, knowing that “ACV” means annual contract value, or understanding your product hierarchy). OpenAI’s models like GPT-4 historically did not allow third-party fine-tuning. OpenAI started offering limited fine-tuning for GPT-4 in 2024 via its managed service, but that is not the same as having full control, and your data must still be sent to OpenAI for training. Claude does not publicly support user fine-tuning as of 2025. Thus, prompt engineering and retrieval (feeding the model relevant data each time) are the main ways to customize closed models for your BI needs. DeepSeek, on the other hand, can be both prompt-engineered and fine-tuned. Even without full fine-tuning, its open nature means you can adjust system prompts heavily, or even modify the model if you have the machine learning expertise. Moreover, the community-driven development of DeepSeek means there may be domain-specific variants or community fine-tuned versions (for finance, marketing, etc.) that you can leverage. In summary, for aligning the AI with company-specific metrics and vocabulary, open models like DeepSeek and LLaMA 2 give you more control, whereas GPT-4 and Claude require careful prompt design and possibly using vector databases to inject domain knowledge.
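Whichever model you choose, it helps to check whether a dashboard extract actually fits its context window before sending it. The sketch below uses the common rule of thumb of roughly four characters per token, which is only an approximation and varies by tokenizer and language; the function names and the reserved-token default are assumptions.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough estimate; real counts depend on the model's tokenizer."""
    return int(len(text) / chars_per_token) + 1


def chunk_rows(rows: list[str], max_tokens: int, reserved: int = 1000) -> list[list[str]]:
    """Group rows into chunks that fit the window, leaving room for the reply."""
    budget = max_tokens - reserved
    if budget <= 0:
        raise ValueError("reserved tokens exceed the context window")
    chunks, current, used = [], [], 0
    for row in rows:
        cost = estimate_tokens(row)
        # Start a new chunk when this row would overflow the current one.
        if current and used + cost > budget:
            chunks.append(current)
            current, used = [], 0
        current.append(row)  # a single oversized row still gets its own chunk
        used += cost
    if current:
        chunks.append(current)
    return chunks
```

With a 128K window most dashboard extracts land in a single chunk, while a 4K model would need the same rows split across several calls and the partial answers merged afterwards.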

In choosing an LLM for your BI dashboard, consider the trade-offs: GPT-4 is extremely powerful out-of-the-box but comes with ongoing costs and data going to a third-party cloud; Claude offers an exceptionally large context (great for reading long reports) but similarly is a paid cloud service; LLaMA 2 is free and can be run locally, but you need AI engineering effort to match its performance to the others.

DeepSeek strikes a middle ground – it is open-source and can be self-hosted (so you avoid API costs and can keep data internal), yet it was built to compete with top-tier models in capability. Its large context window and cost-efficient design make it attractive for BI use cases where you might feed entire dashboards or big data slices into the model.

Many teams might even use a hybrid approach: e.g., run a local DeepSeek 7B model for quick, low-cost queries, but call GPT-4 via API for the hardest questions – the integration patterns described earlier would allow either.
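A hybrid setup like this needs some rule for deciding which model handles a given question. The sketch below uses a deliberately crude heuristic (prompt length plus a few marker words) purely as an illustration; make_router, the thresholds, and the marker list are all assumptions, and a production router would more likely rely on a classifier or on user choice.

```python
from typing import Callable, Tuple

Model = Callable[[str], str]


def make_router(
    local_model: Model,
    remote_model: Model,
    max_local_chars: int = 8000,
    hard_markers: Tuple[str, ...] = ("why", "forecast", "root cause"),
) -> Callable[[str], Tuple[str, str]]:
    """Return a function that picks a backend per question (crude heuristic)."""

    def route(question: str) -> Tuple[str, str]:
        lowered = question.lower()
        # Long prompts and diagnostic or predictive questions go to the larger model.
        is_hard = len(question) > max_local_chars or any(
            marker in lowered for marker in hard_markers
        )
        if is_hard:
            return "remote", remote_model(question)
        return "local", local_model(question)

    return route
```

The returned label ("local" or "remote") can be logged next to each answer, which makes it easier to review later whether the routing rule is sending questions to the right place.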

The good news is that the BI workflows (natural language querying, automated insights, etc.) are not tied to one model; you can experiment and choose what mix of models gives the best accuracy and value for your scenario.

Data Privacy and Compliance Considerations

Integrating an AI model with enterprise dashboards inevitably raises questions about data privacy, security, and compliance. Sensitive business data is at stake, so it’s crucial to deploy DeepSeek (or any LLM) in a way that meets your organization’s policies and regulatory requirements.

If you use DeepSeek via its cloud API (or the hosted chat platform), any query you send – which might include company data or queries about that data – will be processed on DeepSeek’s servers. According to DeepSeek’s privacy policy, data from their services is stored on secure servers in the People’s Republic of China.

This has two implications: (1) your data could be retained (the retention terms are not very clear), and (2) if you operate in regions like the EU, sending data to a service under Chinese jurisdiction might not be compliant with GDPR or other data protection laws.

In fact, the lack of clarity around data usage has led some governments and companies to restrict or ban the use of DeepSeek’s app and website on official devices. For example, as of early 2025, certain countries (Italy, Taiwan, Australia) and U.S. states/agencies banned DeepSeek over privacy concerns.

On-Premises Deployment: The safer alternative is to leverage DeepSeek’s open-source models in an on-premise or private cloud environment. When you download the DeepSeek model and run it on your own servers, no data needs to leave your network – queries and responses stay within your control.

Deploying locally eliminates the external privacy risk inherent in cloud APIs. Many enterprises favor this approach for any AI that will handle confidential or proprietary information. The trade-off is that you need sufficient hardware (GPUs, memory) to run the model.

The full DeepSeek R1 model (671B parameters) requires a high-end GPU cluster, and the 67B and 70B-class models are also large and may need multiple high-end GPUs, though smaller distilled versions (e.g. 7B or 14B parameters) can run on a single modern server or even a powerful laptop for testing.

The integration steps we discussed (for Power BI, Tableau, etc.) work similarly with a self-hosted model – you would just point your API calls to your local model’s endpoint instead of DeepSeek’s cloud URL.

Compliance: If you’re in a highly regulated industry (finance, healthcare, government), check how using an LLM interacts with regulations. Some considerations:

PII and Sensitive Data: Ensure that any personally identifiable information or sensitive data isn’t inadvertently sent to the model without proper anonymization, especially if using a third-party API. With a local DeepSeek, you have more freedom to analyze sensitive data since it’s not leaving your secure environment.

Auditability: When DeepSeek provides an insight that influences a business decision, you might need to document how that conclusion was reached. DeepSeek R1’s chain-of-thought feature can be useful here – it can output its reasoning steps. While not typically shown to end-users, such verbose reasoning could be logged for internal audit or debugging, giving some transparency.

Access Control: Not everyone should be able to ask the BI assistant any question they want (imagine someone querying HR or finance data that they shouldn’t see). Leverage your BI tool’s security model – e.g., if a user only has access to certain data in the dashboard, ensure the DeepSeek integration only runs on that data. This may mean embedding filters or user context into the prompts. If using a central DeepSeek service, it should enforce authentication and perhaps even row-level security logic.

Data Retention: If using the cloud API, consider that queries and responses might be stored on the provider side. OpenAI, for instance, offers options to not store data for API customers. For DeepSeek’s API, you’d need to review their terms. With self-hosting, you define how long (if at all) any query logs are kept. It’s good practice to purge or not log raw data within the AI pipeline if not needed.
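Two of the considerations above, PII handling and access control, can be enforced in code before any prompt is built. The sketch below masks e-mail addresses and one common phone format, and filters rows by the regions a user is allowed to see. The patterns are illustrative and far from exhaustive, and the region and note field names are hypothetical.

```python
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Matches only the 3-3-4 digit format with separators, e.g. 555-123-4567.
PHONE = re.compile(r"\b\d{3}[\s.-]\d{3}[\s.-]\d{4}\b")


def redact(text: str) -> str:
    """Mask e-mail addresses and phone-like numbers before prompting."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def rows_for_user(rows: list[dict], allowed_regions: set[str]) -> list[dict]:
    """Keep only rows the user may see, mirroring the BI tool's row-level security."""
    return [row for row in rows if row["region"] in allowed_regions]


def build_context(rows: list[dict], allowed_regions: set[str]) -> str:
    """Filter first, then redact, so hidden rows never reach the prompt."""
    visible = rows_for_user(rows, allowed_regions)
    return "\n".join(redact(f"{r['region']}: {r['note']}") for r in visible)
```

Filtering before redaction matters: rows a user cannot see should never enter the prompt at all, even in masked form.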

In summary, cloud API vs. on-premise boils down to risk tolerance and convenience. Cloud deployment (whether DeepSeek’s or another LLM’s) is quick to set up but means trusting a third party with your data.

On-premise gives you full control and privacy, aligning better with strict compliance, at the cost of infrastructure and maintenance. Many enterprises may start by prototyping with the cloud API (using non-sensitive sample data) and then migrate to a private deployment for production.

DeepSeek’s open-source nature uniquely enables this flexibility – you could even run it within an air-gapped environment if needed, something impossible with strictly cloud LLMs. Always involve your security and compliance teams early when planning an AI integration into dashboards, to ensure the solution meets all guidelines.

Future Outlook: From Passive Reports to Conversational BI

The rise of DeepSeek and other LLMs in BI signals a fundamental shift in how we interact with data. Dashboards of the future won’t be static collections of charts that end the conversation once the numbers are presented – instead, they’ll begin a conversation. We’re moving toward dashboards that are active participants in decision-making.

Imagine a scenario a year or two ahead: as soon as you open your dashboard in the morning, an AI assistant (powered by something like DeepSeek) greets you with “Good morning, here are the important changes in yesterday’s metrics and what they mean.”

You ask follow-up questions in plain language – “How does this compare to last quarter?” or “Which customer segment should we focus on to improve this?” – and the answers and suggestions appear instantaneously, with relevant visuals. The AI might even trigger certain actions: “Shall I draft an email to the sales team about the drop in the West region?” This isn’t sci-fi; components of it are already emerging.

Microsoft and Salesforce are integrating GPT-4 into their analytics and CRM tools, and open-source projects like DeepSeek demonstrate that you can build your own AI-augmented analytics without waiting for vendors.

The role of the analyst will evolve. Rather than spending the bulk of time creating reports and manually interpreting data, analysts will train and guide these AI tools, curate the right data for them, and focus on validating and acting on the insights.

Dashboards will become dialogue partners – a place where users don’t just read metrics but discuss them. This conversational capability lowers the barrier for non-analysts to get value from data (they can simply ask in natural language), which democratizes BI access across an organization.

It also means decisions can be made with greater speed and context; the AI can continuously watch data streams and nudge humans when something important happens, along with an explanation of why.

We should also expect more automation in storytelling and decision support. LLMs like DeepSeek can already draft pretty coherent narratives from data. Future improvements (and larger “frontier” models) will make these narratives even more insightful, perhaps even creative in suggesting innovative solutions to problems identified in the data.

Dashboards might auto-generate slide decks for the weekly business review meeting, with each slide’s commentary written by AI and vetted by an analyst. They might simulate scenarios (“digital twins” of business metrics) by combining predictive modeling with narrative (“If current trends continue, we’ll hit $X in sales – here’s why and what we might do to exceed that”).

DeepSeek and similar LLMs are changing BI dashboards from passive visual reports to active, conversational advisors. They bring the “why” and “what’s next” directly into the BI layer.

Companies that embrace this will likely gain a competitive edge – decisions will be made faster and with fuller context, and analytical insights won’t be bottlenecked by human bandwidth. Of course, human expertise remains crucial: these AI tools must be guided, and their outputs verified for accuracy and bias.

But when implemented responsibly, an LLM-augmented dashboard can essentially bridge the gap between data and decision in real-time. As one practitioner noted, it turns a dashboard into an “AI-assisted advisor” rather than just a report.

In conclusion, using DeepSeek in business intelligence dashboards is an opportunity to supercharge your analytics, enabling LLM-driven BI workflows that were not possible just a few years ago.

Whether it’s through conversational Q&A, automated business insights generation, or proactive analytics, the integration of LLMs heralds a new era where dashboards do more of the heavy lifting.

By carefully comparing options (DeepSeek vs. GPT-4 vs. others), addressing privacy needs, and following best practices in integration, dashboard developers and product managers can build the next generation of BI tools: ones that not only show data, but also understand and talk about it. The future of BI is here – and it speaks in natural language.